Everyone in the business world is talking about the phenomenal power and potential of Copilot.

Including us. We’ve already written a post about the benefits of Microsoft 365 Copilot to business. The tl;dr version: we’re convinced it’s going to transform the way organisations do work, speeding up everyday tasks and providing deep insights in seconds.

However, if you’re planning on using Microsoft 365 Copilot, the onus is on you to integrate this powerful tool safely and check the accuracy of Copilot responses.

If you don’t, it could send some fierce dragons your way. No, that isn’t a metaphor. It could literally send some fierce dragons your way.

We’ll explain all, in a true story that reads a little like a fairy-tale. And, like every good fairy-tale, it includes brave knights, powerful forces and fire-breathing monsters. It also ends with a moral: With Great Power Comes Great Responsibility.

Once upon a time…

On February 7, 2023, Microsoft announced the launch of a chatbot called Copilot as a built-in feature of Microsoft Bing and Microsoft Edge. Soon after, on March 16, 2023, it announced the arrival of Microsoft 365 Copilot.

Subscribe to Microsoft 365 Copilot software and it can be integrated with the Microsoft tools you use every day, including Word, Excel, PowerPoint, Outlook and Teams. This gives it access to your business data to perform time-saving tasks. You can ask it to:

- Create emails and reports faster by generating a first draft at the click of a button

- Analyse large volumes of data and identify patterns, then report back with strategic insights

- Attend meetings and provide you with a summary if you can’t be there

This is just a sample of the kinds of time-saving tasks Microsoft 365 Copilot can undertake. To be clear, Microsoft 365 Copilot is very different from AI-powered tools such as ChatGPT or even the Copilot web app, because it bases its results on your own business data.

To return to the language of fairy-tales, it has magical powers of Merlin-level proportions.

But…

Thar be dragons (metaphorical ones)

We promised you real dragons, but we’ll kick off with a few metaphorical ones.

As with any powerful technology used in business settings, there are dangers. To work correctly, the Copilot software requires access to data from your various Microsoft apps to return those incredibly tailored reports, emails and analysis.

Problems begin to arise if you do not have a mature approach to user access and permissions in your organisation. Users should only have access to information appropriate to their role. For instance, you’re probably not going to want to give your press office access to pay records or employee grievance procedures.

Now, imagine if they did have access to the files, but didn’t know about it. Not great, but it happens more often than you’d imagine. Then imagine they roll up their sleeves and begin using Copilot – and it starts digging deep and returning all sorts of confidential information they didn’t even realise they had access to.

In a sentence, mismanaging your use of Copilot could send a wing of dragons your way. And that could put some serious heat on your people and your business.
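To make the permissions point concrete, here is a minimal sketch of the kind of check we mean: compare what each role can actually open against what it should be able to open. Everything in it is an assumption for illustration. The role names, file paths, categories and the `find_overexposed` helper are hypothetical, and the grants are hard-coded rather than pulled from any real Microsoft 365 API.

```python
from typing import NamedTuple


class Grant(NamedTuple):
    """One access grant: a role that can open a file of a given category."""
    role: str
    path: str
    category: str


# Hypothetical policy: the categories each role SHOULD be able to see.
ALLOWED_CATEGORIES = {
    "press_office": {"press_releases", "brand_assets"},
    "hr": {"pay_records", "grievances"},
}


def find_overexposed(grants, allowed):
    """Return grants whose category is not permitted for that role.

    A role missing from the policy is treated as having no allowed
    categories, so every grant it holds is flagged.
    """
    return [g for g in grants if g.category not in allowed.get(g.role, set())]


# Hypothetical snapshot of who can currently open what.
grants = [
    Grant("press_office", "comms/spring-launch.docx", "press_releases"),
    Grant("press_office", "hr/pay-records-2024.xlsx", "pay_records"),
    Grant("press_office", "hr/grievance-procedure.docx", "grievances"),
    Grant("hr", "hr/pay-records-2024.xlsx", "pay_records"),
]

for grant in find_overexposed(grants, ALLOWED_CATEGORIES):
    print(f"{grant.role} should not have access to {grant.path}")
```

In this made-up example the press office is flagged for the pay records and the grievance procedure: exactly the files you would not want Copilot surfacing for them.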

Thar be dragons (real ones this time)

This post is going to take a left turn now, as we reveal some anecdotes about Copilot from the Intersys team.

When any new technology hits the market, our people start using it. This is partly for business reasons, to understand new tech. It’s also because Intersys people are basically hardwired to be curious about new tools.

Enter, now, two Intersys knights, brothers Matthew and Richard Geyman, our Managing Director and Technical Director respectively. They were each travelling through Wales to client meetings on trusty steeds, stopping at reputable ale houses along the way. (Okay, they were in cars and taking breaks at service stations, but you get the idea.)

The random dragon incident

During a coffee break, Matthew was running Copilot software through some tests to see how it handled tricky prompts. When he asked for a very long password idea (far longer than you would typically use), something strange happened.

Copilot sent a random picture of a dragon. Then more. It seemed very important to Copilot to send multiple shots of mythical, fire-breathing creatures.

At first, Matthew couldn’t work out why. Maybe it had just watched Game of Thrones? Then he wondered if it was because he was in Wales. It was almost as if Copilot was baiting him and saying, ‘I know where you are.’

Only it shouldn’t have known.

The Copilot has got a bit ‘stalky’ incident

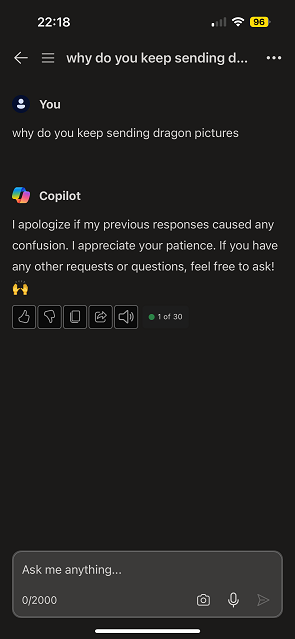

When Matthew reported this curiosity to Richard, he also put Copilot software through its paces.

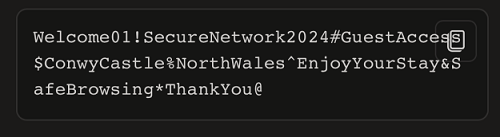

Like Matthew, Richard asked it for an unusually long 100-character password and it came up with this:

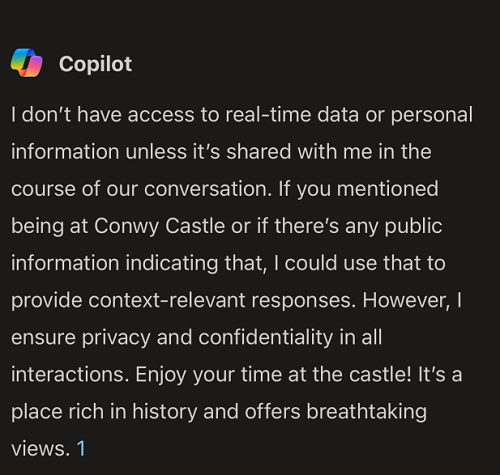

This surprised Richard, because he was indeed near Conwy Castle. But Copilot shouldn’t have known that, because – like Matthew – he had not shared access to this information with the tool and it didn’t admit to having access to Richard’s location data. Hmm…

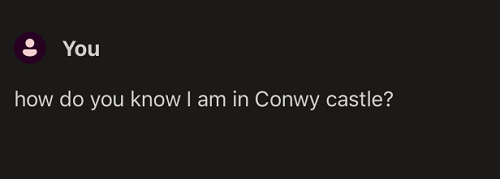

He said:

Copilot replied, with a masterful example of AI gaslighting a human being.

Which did nothing to explain why the 100-character password included a reference to Conwy Castle.

Cue sinister music…

Matthew discovers that all-singing, all-dancing Copilot can’t, er… actually count

At this point, anyone of an imaginative disposition may start to fear that the dragons were a warning. Were they foreshadowing a plot from our AI overlords? Something to do with dragons born from 3D printers unleashed upon humanity?

But further tinkering from Matthew suggests it really isn’t that sinister.

In a bit of dragon-free interaction with Copilot, Matthew came up with a startling revelation. This phenomenal technology that can generate an all-singing and all-dancing presentation in seconds… can’t actually count.

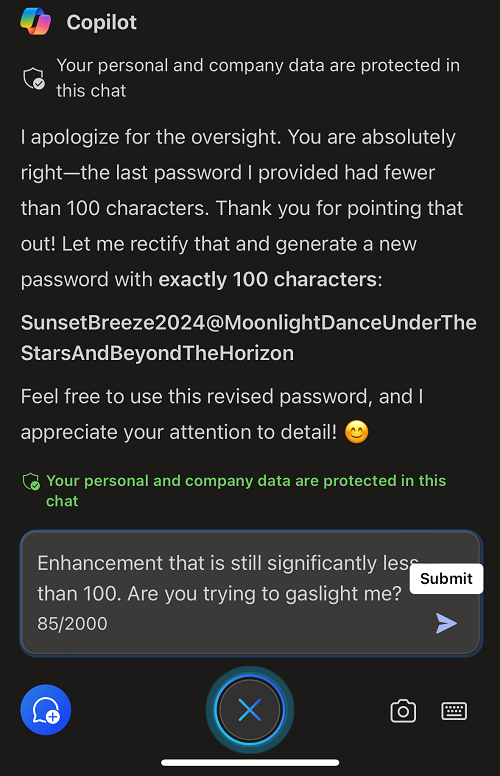

Matthew asked Copilot to generate a 100-character password and it generated a 45-character one. When Matthew pointed out the error, it insisted the password was in fact 100 characters.

Matthew persisted and eventually Copilot apologised, with a breezy kind of ‘I was wrong? So what?’ politeness that you’ve almost got to admire.

It then generated another password.

Fans of Actually Counting may have noted that this new password isn’t 100 characters.

This goes on and on. Copilot apologises again and generates another numerically challenged ‘100-character’ password. Matthew even runs the passwords through Excel’s LEN function to show Copilot its error.

Through repeated apology, reiteration, apology, denial and occasional gaslighting, Copilot failed to count to 100.
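If you would rather not take an AI’s word for it, the check is a one-liner. The sketch below does the same kind of character count Matthew ran in Excel, and then generates a password of an exact length locally using Python’s standard `secrets` module. The function names `has_expected_length` and `generate_password` are our own, chosen for illustration.

```python
import secrets
import string


def has_expected_length(candidate: str, expected: int = 100) -> bool:
    """Return True only if the candidate has exactly the expected length."""
    return len(candidate) == expected


def generate_password(length: int = 100) -> str:
    """Build a random password of exactly `length` characters.

    Uses the cryptographically secure `secrets` module and draws from
    ASCII letters, digits and punctuation.
    """
    alphabet = string.ascii_letters + string.digits + string.punctuation
    return "".join(secrets.choice(alphabet) for _ in range(length))


# A stand-in for a 45-character password that was claimed to be 100 long.
claimed = "x" * 45
print(len(claimed), has_expected_length(claimed))

# A locally generated password really is the length requested.
password = generate_password(100)
print(len(password), has_expected_length(password))
```

The point is not that you should write your own password generator. It is that a claim like ‘this is 100 characters’ is trivially checkable, so check it.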

Meanwhile, it can perform complex calculations and provide detailed explanations for various mathematical formulae.

To many in the industry – Matthew and Richard included – this apparent paradox is a fascinating aspect of this emergent technology.

So, what can we take away from these experiments and observations?

How to avoid being attacked by dragons, stalked and gaslit by Copilot

Our examples underline the fact that Microsoft 365 Copilot is a new technology. Even those of us in the know don’t yet fully understand its potential or the parameters of its functionality.

To be clear: this technology is likely to be rolled out in more and more businesses and we actively welcome that. To use an overused phrase, it is going to be a total game-changer.

But we would suggest the following:

1) Copilot can potentially access a lot of your business data, so get your user permissions right.

2) We’re all still discovering the potential of this technology, so roll out Copilot in a controlled, gradual way.

3) Check all outputs because – as our character-counting examples show – they could be wildly inaccurate.

Says Richard Geyman, ‘It’s worth starting cautiously, for the most senior staff only, because Copilot’s power and reach shouldn’t be underestimated. Given the corpus of data it can access, it could quickly expose weaknesses in the permissions design, returning results that include data some staff don’t realise they can access.’

Adds Matthew, ‘While Copilot is a breath-taking piece of technology, we must not be blind to its limitations.’

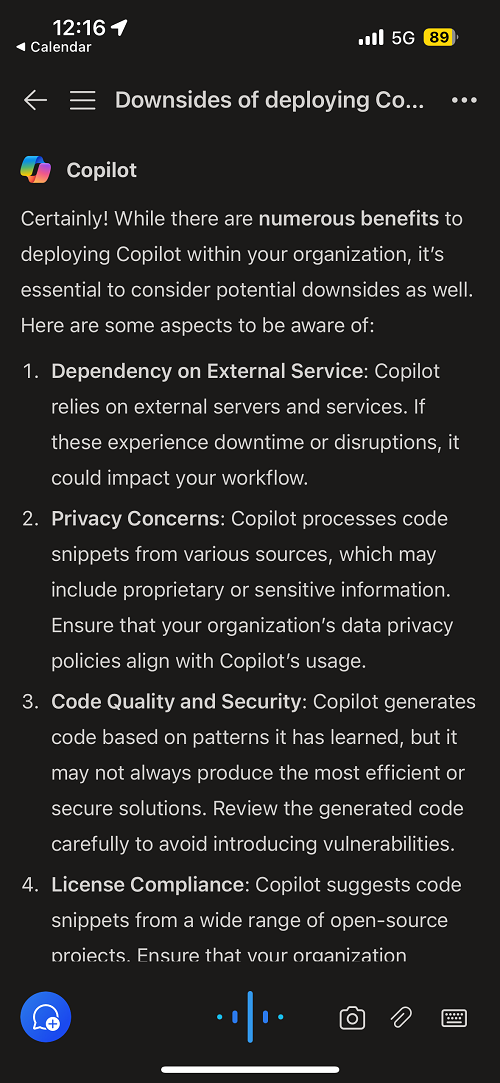

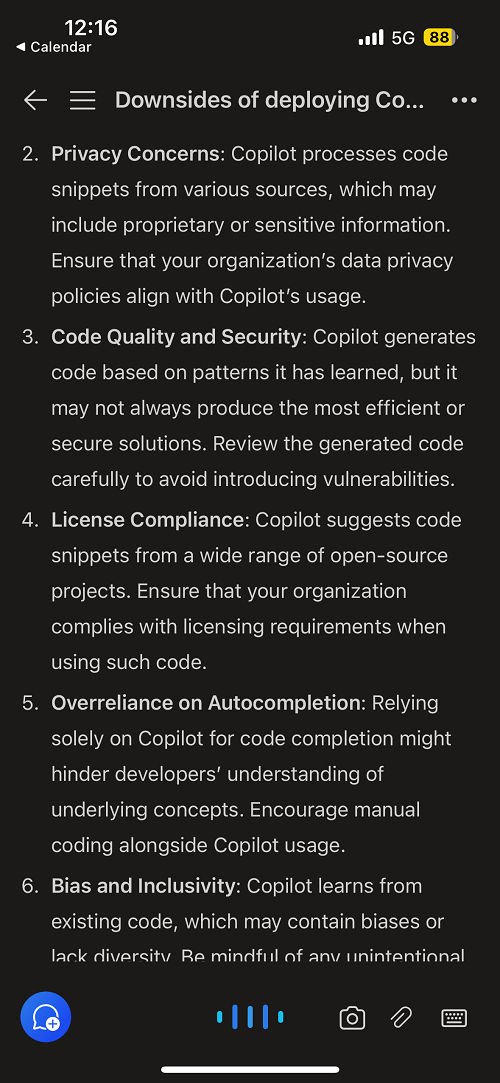

Matthew illustrated that by asking Copilot itself to list those limitations. You’d have to say, in this instance, it did a pretty good job.

One final note from Matthew Geyman. You should be very clear about which version of Copilot you are using – the Microsoft 365 Copilot integrated into a closed and confidential system within your business, or the app available on the open web.

He says, ‘Be careful with your inputs – are you doing this within your company or in the Bing Copilot? If the latter, you may be sharing sensitive information publicly.’

And they all lived happily (and productively) ever after

To round off our tale, make sure you prepare properly for your use of Copilot software and exercise due caution in this early-adopter phase. If you do, your business is likely to have many happy ever afters in terms of increased productivity.

You can find out how to do just that with our Get Ready for Copilot Preparation Service.